The rise of internet-based therapy has the potential to transform mental health care, making it more accessible and personalized. Among the various digital solutions, AI chatbots like ChatGPT are gaining attention for their ability to provide conversational support.

This article will explore some of the promises and pitfalls of ChatGPT-mediated therapy.

Key Takeaways

- ChatGPT and other AI tools can offer guidance and emotional support, but they can’t replace the expertise and personalized care of licensed therapists.

- For serious mental health concerns, consulting with a professional therapist is still necessary to receive tailored treatment and long-term support.

- The integration of AI in therapeutic interventions is an emerging field with both promising developments and important limitations to consider.

- Individuals seeking mental health support should carefully evaluate the appropriate use of AI-driven tools and seek professional help when needed.

- The future of AI in mental health care will likely involve a collaborative approach, where AI-powered technologies complement the expertise of human therapists.

The Rise of Internet-Based Therapy

Digital therapy has grown rapidly in recent years, changing how mental health services are delivered and making them more accessible, scalable, and personalized. This trend has been driven by technological advancements and a growing demand for mental health services.

Therapy chatbots are one significant development in digital therapy. These bots are often powered by large language models (LLMs) like GPT, which are trained on vast amounts of text data, including information related to mental health.

This training data can include cognitive behavioral therapy (CBT) techniques, self-help guides, and therapeutic dialogues.

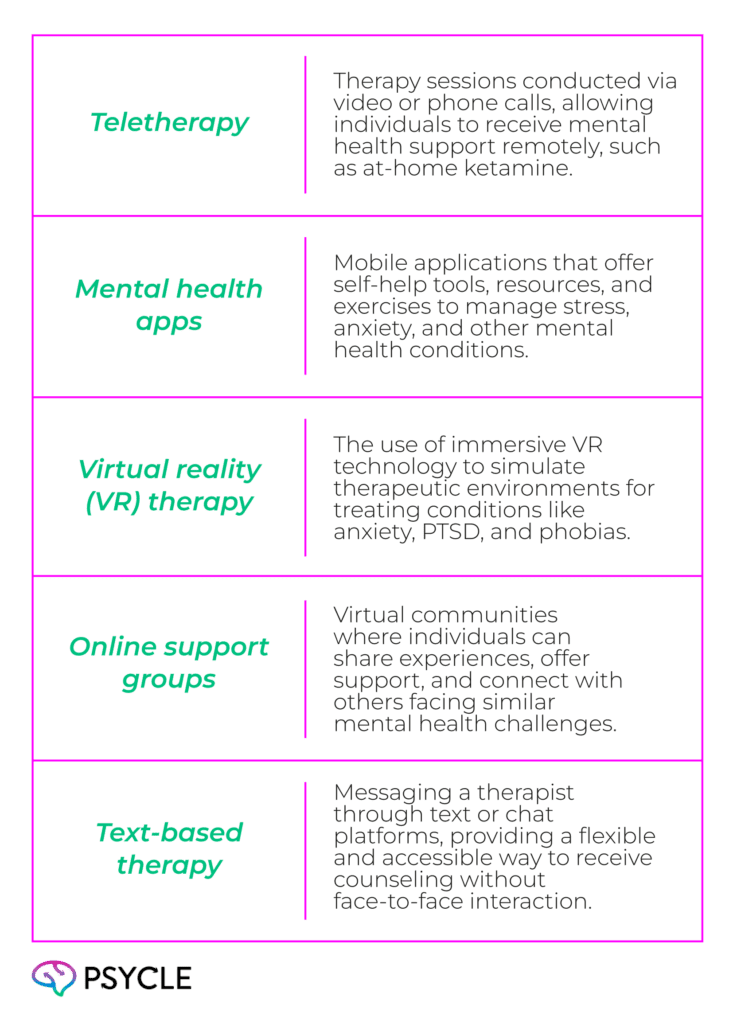

Therapy chatbots provide conversational support to people struggling with their mental health and may be especially useful for people who are hesitant to attend in-person therapy. Chatbots are one of several internet-based therapies that have grown in popularity in recent years.

Prevalence and Treatment of Depression

Depression is a widespread global mental health issue, affecting an estimated 5% of the worldwide population. It is a leading cause of disability, and its global burden is increasing every year as cases continue to rise.

Despite its prevalence, many people with depression do not receive adequate treatment due to barriers like stigma, lack of mental health professionals, or high costs. In low- and middle-income countries, access to effective mental health care is particularly limited, further exacerbating the problem.

Therapy bots could help increase access to therapy for people with depression. Because they are available 24/7, they can make mental health support more readily available to people across different regions, economic backgrounds, and time zones.

Can ChatGPT Do Therapy?

ChatGPT is one of the most widely used language models. It is designed for general purposes rather than specializing in therapy like some AI mental health bots. Although it is trained on a wide range of information, including mental health topics, it isn't specifically tailored for therapeutic use.

However, it can still provide support by offering a space for people to reflect on and explore their thoughts and emotions through conversations. ChatGPT can also provide advice on coping strategies like mindfulness and stress management.

Some evidence shows ChatGPT can be therapeutically useful. In one small study, twenty-four mental health patients were instructed to use ChatGPT to manage their condition. Sixty percent of participants said ChatGPT helped them understand their condition, and fifty percent said they felt emotionally supported by the bot.

According to a survey by Tebra, a healthcare technology company, 80% of people who sought mental health support from ChatGPT considered it an effective alternative to traditional therapy.

Empathy and Emotional Intelligence Challenges

ChatGPT faces significant challenges regarding empathy and emotional intelligence, which are critical in therapy. While it can respond with empathetic language and offer advice on managing emotions, it doesn’t truly understand or feel emotions like a human therapist.

Emotional intelligence involves recognizing subtle emotional cues, adapting responses in real time, and building a deep understanding of a person's emotional state. These are all areas where ChatGPT falls short.

As a result, while ChatGPT can provide some support, it lacks the depth of emotional attunement and adaptability necessary for effective therapy.

The Therapeutic Alliance and Client Preference

The therapeutic alliance is the relationship of trust and mutual understanding between a therapist and client and is a key factor in successful therapy. This is something ChatGPT cannot fully replicate.

This alliance is built through personal rapport, emotional attunement, and shared goals, which require genuine human connection.

Additionally, many clients prefer things ChatGPT cannot offer, such as face-to-face interaction and nuanced emotional support.

Transparency and Trust in AI-Generated Messages

Therapists are bound by strict ethical guidelines, such as confidentiality, informed consent, and a duty of care to their clients. ChatGPT, on the other hand, is an AI without the ability to ensure these ethical standards are met. This can compromise trust in sensitive therapeutic contexts.

Another concern is the validity of the data ChatGPT is trained on, which includes a vast amount of general information from the internet. This data may not always be reliable or clinically accurate, raising questions about the appropriateness of advice or responses generated by the AI in mental health scenarios.

As a result, while ChatGPT can offer general support, it lacks the ethical safeguards and reliable, clinically validated information needed for effective, trustworthy therapy.

The Role of AI in Therapeutic Interventions

Although ChatGPT may not be able to replace therapy, it has the potential to support the psychotherapeutic process. Therapy clients could use it to process their emotions and stressors, for instance, by asking ChatGPT to summarize or make sense of their feelings.

This could then be used as material for an in-person therapy session.

Moreover, people can ask for advice about coping strategies outside of their therapy sessions when stressful events occur.

Therapists, as well as clients, may benefit from using ChatGPT. For instance, they could use ChatGPT to quickly access information about mental health topics. However, it’s important they verify this with official sources or other experts before giving patients advice.

Therapists can use ChatGPT to help with administrative tasks, such as drafting session notes, creating treatment plans, or generating client reminders.

Some research suggests that ChatGPT could help identify suicide risk based on how clients present. Therapists might consider using ChatGPT as a supplementary check when they suspect a client is exhibiting risky behaviors.

However, it’s essential for therapists to also use their own intuition, expertise, and consultations with other professionals to validate any conclusions regarding psychiatric risks.

The Future of AI in Mental Health Care

The future of AI in mental health care is promising, with numerous therapy bots being developed to enhance emotional support and therapeutic interventions.

For instance, bots like Woebot and Wysa are specifically designed to provide evidence-based therapeutic techniques in an engaging and user-friendly manner. Additionally, some platforms are exploring the integration of voice recognition and natural language processing to facilitate more nuanced conversations.

As research progresses, these specialized bots aim to complement traditional therapy. However, it is essential that these advancements maintain a focus on ethical considerations, ensuring user safety and data privacy while delivering effective mental health support.

FAQs

Can ChatGPT Replace a Therapist?

No, ChatGPT cannot replace a therapist. It can provide support and resources, but it lacks the emotional intelligence, empathy, and ethical responsibilities that are essential for effective therapy.

How Can Therapists Use ChatGPT?

Therapists can use ChatGPT to access information on mental health topics, assist with administrative tasks, and help clients process emotions between sessions. However, they should verify any advice with trusted sources.

Is ChatGPT Safe for Discussing Mental Health Issues?

While ChatGPT can offer general support, it is important to approach its responses with caution. Users should seek professional help for serious mental health concerns and not rely solely on AI-generated advice.

What are Some Examples of Other Therapy Bots?

Examples include Woebot and Wysa, which are designed to provide evidence-based therapeutic techniques and personalized support to users.

Source Links

- https://journals.lww.com/indianjpsychiatry/fulltext/2023/65030/Artificial_intelligence_in_the_era_of_ChatGPT__.1.aspx

- https://www.ncbi.nlm.nih.gov/pmc/articles/PMC10235420/

- https://pmc.ncbi.nlm.nih.gov/articles/PMC10427505/

- https://pmc.ncbi.nlm.nih.gov/articles/PMC10838501/