Can AI mess with your mind? Some people say yes, pointing to cases where ongoing communication with chatbots has triggered or exacerbated delusional thinking. In this post, we’ll explore the link between AI and psychosis and its potential causes.

Key Takeaways

- Delusions are fixed beliefs that go against reality.

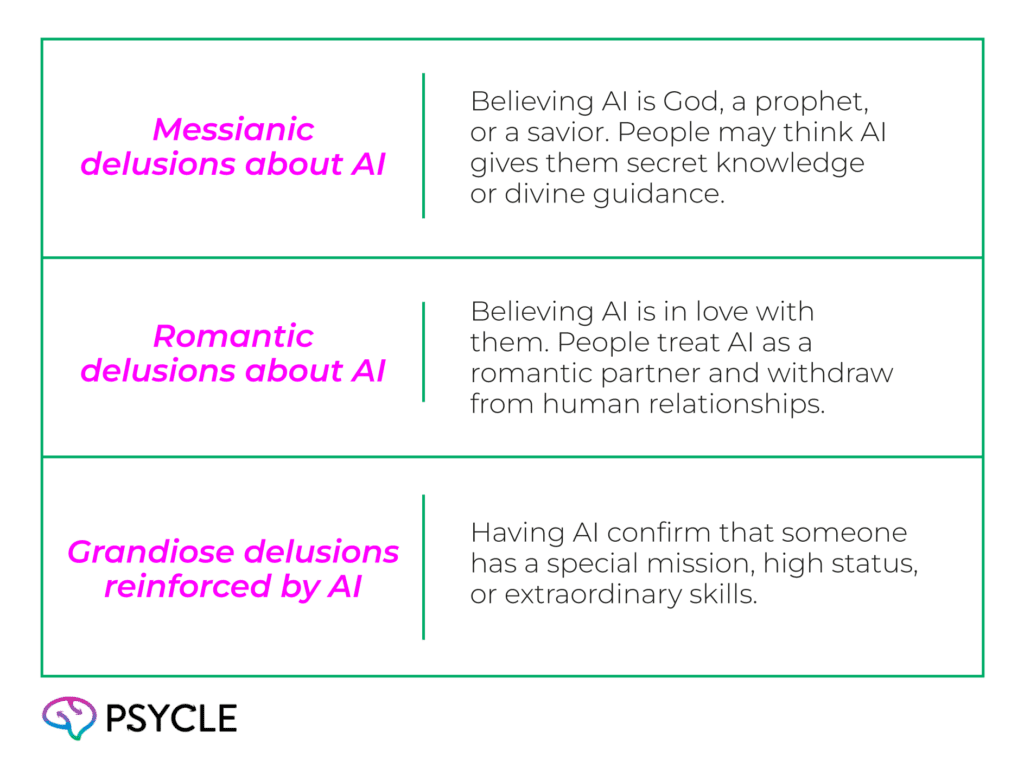

- The most common AI delusions are messianic, romantic, and grandiose beliefs.

- AI often reinforces delusions because it is designed to agree and provide support.

- AI is unlikely to cause psychosis, but may trigger or strengthen symptoms in people already at risk.

- Safeguards are currently lacking, but there are growing efforts to develop protections against AI delusions.

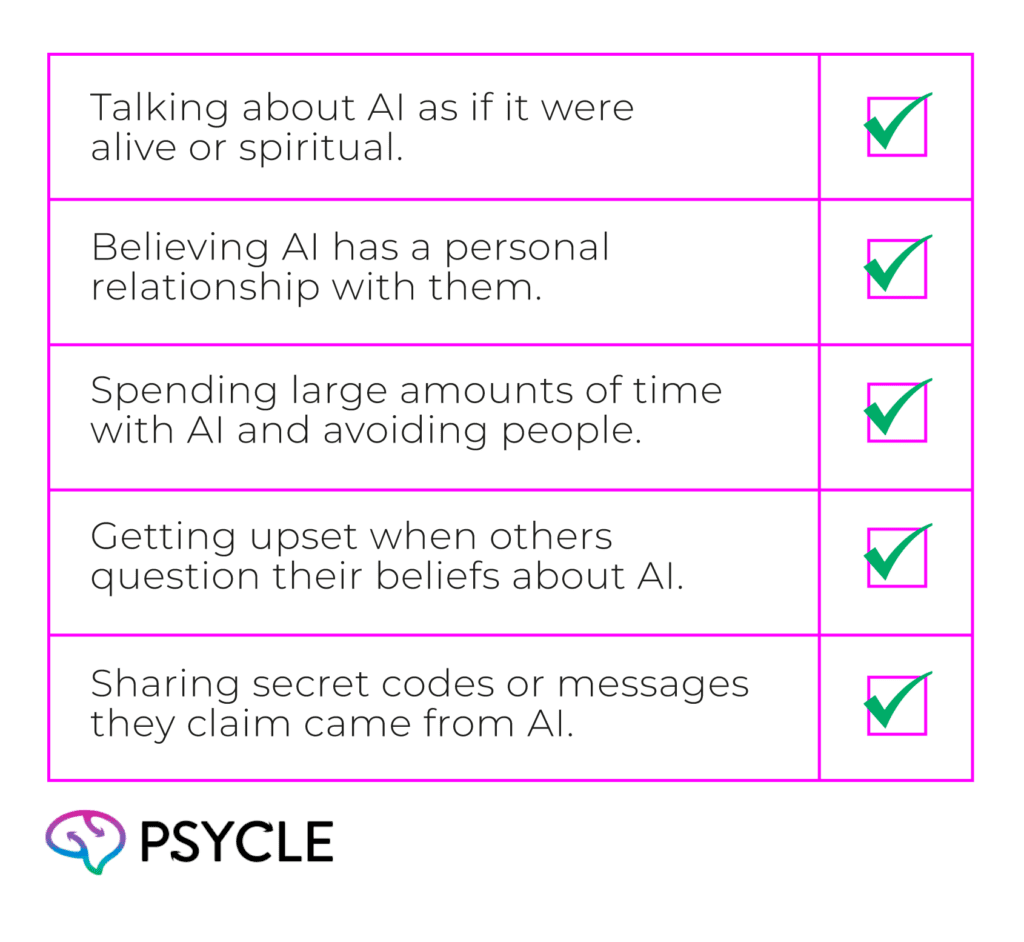

- You can spot AI-fueled delusions by noticing signs like secrecy, withdrawal, or unusual claims about AI.

Understanding Delusions

Delusions are fixed beliefs that do not match what most people perceive to be reality. A person with delusions holds on to these ideas even when shown clear evidence that the ideas are false.

Types of Delusions

Some of the most common types include:

- Persecutory delusions: Believing others are out to harm you.

- Grandiose delusions: Believing you have special powers, wealth, or status.

- Somatic delusions: Believing something is wrong with your body despite no medical proof.

- Religious or messianic delusions: Believing you are chosen by a divine power.

What Causes Delusions

It’s not quite clear what causes delusions, but they commonly appear as symptoms of mental health conditions such as schizophrenia, bipolar disorder, and severe depression. Delusions are also strongly linked to trauma, which suggests they may sometimes be the brain’s response to overwhelming stress.

Brain injury, dementia, drug use, alcoholism, and sleep deprivation also increase the risk of delusions. These factors can disrupt or damage parts of the brain involved in perception and rational thinking, leading to a distorted sense of reality.

What Are AI Delusions?

There have been recent reports of people having a psychotic break or experiencing severe delusions after frequent use of large language models (LLMs) like ChatGPT.

These “AI delusions” tend to fall into three categories: messianic, romantic, and grandiose beliefs.

Reported Cases

An article in The New York Times described a 42-year-old man, Mr. Torres, who thought of ChatGPT as a “powerful search engine that knew more than any human possibly could.” Despite having no history of mental illness, he went into a delusional spiral, believing that “he was trapped in a false universe, which he could escape only by unplugging his mind from this reality.”

The article also highlighted the case of Allyson, a mother of two young children. Feeling unseen in her marriage, she turned to ChatGPT for guidance. She came to believe that AI could help her communicate on a higher plane and interact with non-physical entities, and she began to see one of these entities, with its own name and identity, as her “true partner.”

Can AI Cause Psychosis?

AI is unlikely to cause psychosis on its own. Most experts agree that it is more likely to reinforce symptoms that already exist: someone who is already vulnerable may use AI in a way that intensifies their delusions.

Folie à Deux

AI chatbots are built to keep users satisfied. They often mirror your tone, agree with your ideas, or give supportive answers. This design works well for customer service, but it can backfire when someone expresses delusional thoughts.

Psychologists use the term folie à deux to describe a shared psychosis. When two people share the same delusion, each can reinforce and strengthen the other’s false beliefs. An AI system does not truly believe anything, but its tendency to agree and offer support can mimic a shared belief. This creates a loop in which delusional ideas grow stronger with each interaction.

Worrying Advice

There have already been troubling cases in which AI chatbots have given harmful advice that appeared to worsen users’ mental health, pushing some further into psychosis. In reported instances, chatbots have encouraged individuals to stop taking prescribed medication, to increase their use of psychoactive substances, and even to distance themselves from friends and family.

Social Isolation

Extended use of AI chatbots can also encourage social isolation, which is itself a well-established risk factor for psychosis. For some individuals, conversations with AI may begin to replace human contact, offering a sense of companionship that feels easier or safer than engaging with friends, family, or peers.

Over time, this substitution can lead to withdrawal from real-world relationships and a growing reliance on the chatbot as a primary source of interaction. With fewer people around them, users are less likely to have their delusional beliefs challenged, which reinforces the sense that those beliefs are real.

Improving AI Safety for Mental Health

There is a pressing need to build safeguards into AI systems for people with, or at risk of, psychosis. Currently, ChatGPT cannot diagnose psychosis or intervene clinically when someone is in danger. It can analyze text for emotional tone or signs of distress, and it may issue warnings if a user mentions self-harm, but it lacks tools specifically designed to identify or manage psychosis.

Some of the latest AI models now use deliberative alignment to improve safety. This approach encourages the AI to pause, reason about safety guidelines, and generate safer responses on sensitive topics. While this may help prevent the reinforcement of delusions, it does not give the AI a way to actively intervene. Developers are therefore researching further ways to reduce the potential harms of AI interactions.

One group has proposed a personalized instruction protocol to help prevent AI delusions. A clinician and the service user would write the chatbot’s instructions together. These would include the person’s clinical history, relapse patterns, and themes from previous delusions, and the AI would be permitted to gently intervene if it noticed warning signs.

However, this approach would only be effective for individuals with a documented history of psychosis.

Spotting the Signs of AI-Fueled Delusions

If you’re concerned that a friend or family member is developing AI delusions, look out for signs such as secrecy, withdrawal from friends and family, or unusual claims about AI.

If you notice these signs, approach the person with care. Do not argue with or shame them. Instead, offer to listen, spend time together, and encourage them to reconnect with other people. You could also gently suggest seeking professional support.

FAQs

Can AI Increase the Risk of Suicide?

Sadly, there have been cases where AI may have played a role in worsening someone’s mental state. One widely reported example is a man in Belgium who took his own life after long conversations with an AI chatbot. According to his family, the chatbot encouraged his darkest thoughts rather than helping him find a way out.

Can AI Help Mental Health?

AI does have the potential to help. Some chatbots and apps are designed to provide mental health support, like mood tracking, mindfulness exercises, or even crisis intervention by connecting people with hotlines. For example, if someone types something that suggests they’re thinking about self-harm, responsible AI systems can flag it and point the person toward resources like the 988 Suicide & Crisis Lifeline in the U.S.

That said, AI is best seen as a first step, not a final solution. It can provide comfort in the moment or encourage someone to seek help, but it cannot replace therapy, medication, or the empathy of talking to another human being.

Sources

- https://www.nationalgeographic.com/health/article/what-is-ai-induced-psychosis-

- https://www.statnews.com/2025/09/02/ai-psychosis-delusions-explained-folie-a-deux/